How To Create Analog And Digital Clocks in an Android Application using Eclipse:

In this tutorial, you will learn how to display a Toggle Button in an activity.

Here you go!

How to get the Android API version programmatically

There are different ways to get it programmatically.

First method:

The SDK level (integer) the phone is running is available in:

    android.os.Build.VERSION.SDK_INT

** UPDATE: Android Complete tutorial now available here.

The constants corresponding to this int are in the android.os.Build.VERSION_CODES class.

    int currentApiVersion = android.os.Build.VERSION.SDK_INT;
    if (currentApiVersion >= android.os.Build.VERSION_CODES.FROYO) {
        // Do something for Froyo and above versions
    } else {
        // Do something for phones running an SDK before Froyo
    }
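Because the comparison direction is easy to get backwards, here is a small plain-Java sketch of the same version gate that can run off-device. `ApiGate`, `isAtLeast` and `branchFor` are hypothetical names, and the two constants only copy the values of `Build.VERSION_CODES.FROYO` (8) and `Build.VERSION_CODES.ICE_CREAM_SANDWICH_MR1` (15) so no Android classes are needed. Code meant for Froyo and later belongs in the branch where the API level is `>=` FROYO.

```java
/** Hypothetical helper showing an API-level gate, runnable as plain Java. */
public class ApiGate {
    // Same value as android.os.Build.VERSION_CODES.FROYO (Android 2.2).
    static final int FROYO = 8;
    // Same value as android.os.Build.VERSION_CODES.ICE_CREAM_SANDWICH_MR1 (Android 4.0.3).
    static final int ICE_CREAM_SANDWICH_MR1 = 15;

    /** True when the running API level is at or above the required one. */
    static boolean isAtLeast(int currentApi, int requiredApi) {
        return currentApi >= requiredApi;
    }

    /** Names which branch a Froyo version gate should take for a given API level. */
    static String branchFor(int currentApi) {
        return isAtLeast(currentApi, FROYO) ? "froyo-or-later" : "pre-froyo";
    }

    public static void main(String[] args) {
        // On a device you would pass android.os.Build.VERSION.SDK_INT instead.
        System.out.println(branchFor(ICE_CREAM_SANDWICH_MR1)); // prints froyo-or-later
    }
}
```

Note that API level 8 itself lands in the Froyo branch, which is what "Froyo and above" means.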


Installing HTC Incredible Android SDK Drivers

In order to debug on your new HTC Incredible smartphone on Windows 7, you need to install USB drivers from Google. Well, it turns out that the phone is too new for Google to have included support for the Incredible in their driver package. Here is how I got it all working, though. It may or may not work for you.

1.) Install the Android SDK and download the USB drivers into a folder inside your SDK as Google tells you to do. My drivers ended up in C:\android-sdk-windows\usb_driver

2.) You next need to hack the file android_winusb.inf to add support for the HTC Incredible.

Find the section labeled [Google.NTx86]. At the end of that section, add the following lines.

    ;
    ;HTC Incredible
    %SingleAdbInterface%        = USB_Install, USB\VID_0BB4&PID_0C9E
    %CompositeAdbInterface%     = USB_Install, USB\VID_0BB4&PID_0C9E&MI_01

Find the section [Google.NTamd64]. At the end of that section, add the following lines.

    ;
    ;HTC Incredible
    %SingleAdbInterface%        = USB_Install, USB\VID_0BB4&PID_0C9E
    %CompositeAdbInterface%     = USB_Install, USB\VID_0BB4&PID_0C9E&MI_01
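If you have to repeat this INF edit (for example after re-downloading the driver package), it can be scripted. The plain-Java sketch below is hypothetical: `InfPatcher` and `addToSections` are our own names, it works on the file's lines in memory, and it appends the HTC Incredible lines just before the header that follows each target section (or at end of file). Reading and writing android_winusb.inf, including its encoding, is left out.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch: appends device entries to the end of named INF sections. */
public class InfPatcher {
    // The HTC Incredible lines from the step above (backslashes escaped for Java).
    static final List<String> INCREDIBLE_ENTRIES = List.of(
            ";",
            ";HTC Incredible",
            "%SingleAdbInterface%        = USB_Install, USB\\VID_0BB4&PID_0C9E",
            "%CompositeAdbInterface%     = USB_Install, USB\\VID_0BB4&PID_0C9E&MI_01");

    /** Returns a copy of lines with entries added at the end of every section named in targets. */
    static List<String> addToSections(List<String> lines, List<String> targets, List<String> entries) {
        List<String> out = new ArrayList<>();
        String current = null; // header of the section we are currently inside
        for (String line : lines) {
            String trimmed = line.trim();
            boolean isHeader = trimmed.startsWith("[") && trimmed.endsWith("]");
            if (isHeader && current != null && targets.contains(current)) {
                out.addAll(entries); // leaving a target section: add entries before the next header
            }
            if (isHeader) {
                current = trimmed;
            }
            out.add(line);
        }
        if (current != null && targets.contains(current)) {
            out.addAll(entries); // a target section ran to the end of the file
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> inf = List.of("[Google.NTx86]", "; existing entries", "",
                "[Google.NTamd64]", "; existing entries");
        List<String> patched = addToSections(inf,
                List.of("[Google.NTx86]", "[Google.NTamd64]"), INCREDIBLE_ENTRIES);
        patched.forEach(System.out::println);
    }
}
```

Entries end up after any blank lines that close a section; the INF format does not care, but you may prefer to tidy the result by hand.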

On your Incredible, go to Settings->Applications->Development and turn on USB debugging. NOW, you can connect your phone to the PC.

On your PC, go to Start, right-click My Computer and choose Manage.

You should see a device with a warning icon listed as Other->ADB. Right-click it, choose Update Driver Software… and install the drivers manually, pointing the wizard to your usb_driver folder. Ignore any warnings about unsigned drivers and everything should install just fine. After installation, I see Android Phone->Android Composite ADB Interface in the Device Manager.

After that, I went to the command prompt and typed:

    >adb devices
    * daemon not running. starting it now *
    * daemon started successfully *
    List of devices attached
    HT048HJ00425    device
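If you want a script to confirm the phone is attached, the output above can be parsed. This is a hypothetical plain-Java sketch (`AdbDevicesParser` and `readySerials` are our names): it skips the daemon status lines and keeps only serials whose state is `device`, dropping rows such as `offline`. In practice you would capture the text with something like `new ProcessBuilder("adb", "devices")`.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: extracts serials of ready devices from `adb devices` output. */
public class AdbDevicesParser {
    static List<String> readySerials(String output) {
        List<String> serials = new ArrayList<>();
        boolean inList = false;
        for (String raw : output.split("\\R")) { // \R matches any line break
            String line = raw.trim();
            if (line.startsWith("List of devices attached")) {
                inList = true; // device rows follow this header
                continue;
            }
            if (!inList || line.isEmpty() || line.startsWith("*")) {
                continue; // skip daemon status messages and blank lines
            }
            String[] parts = line.split("\\s+");
            // Each row is "<serial> <state>"; keep only fully connected devices.
            if (parts.length >= 2 && parts[1].equals("device")) {
                serials.add(parts[0]);
            }
        }
        return serials;
    }

    public static void main(String[] args) {
        String sample = String.join("\n",
                "* daemon not running. starting it now *",
                "* daemon started successfully *",
                "List of devices attached",
                "HT048HJ00425\tdevice");
        System.out.println(readySerials(sample)); // prints [HT048HJ00425]
    }
}
```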


How to manually upgrade an Android smart phone or tablet

We all want the most up-to-date gadgets and therefore the most recent versions of the software that is available for them. Upgrading an Android device might take you to a newer version of the operating system like 4.0 Ice Cream Sandwich or bring new features or enhancements for your smart phone or tablet.


Upgrades for Android devices are generally available over-the-air (OTA), which avoids the need for cables and a PC. They are also rolled out gradually, and their availability depends on the manufacturer and mobile operator. You may receive a notification about an upgrade, but you can also check for one manually and upgrade your device.

Follow the steps below to manually update an Android smart phone or tablet.

Step 1: As a precaution, it’s good practice to back up your data, such as contacts and photos. The upgrade should not affect your data, but there is no guarantee.

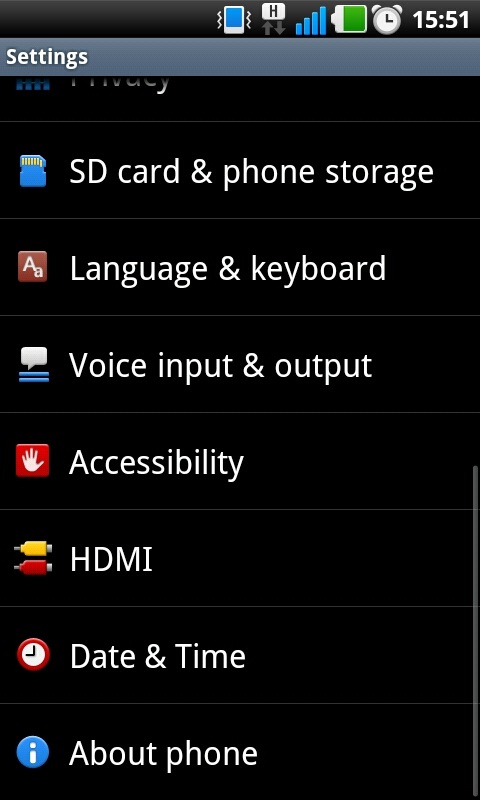

Step 2: Navigate to the Settings menu of your device. On any Android device this can be done via the app menu. Typically the Settings app has a cog or spanner icon.

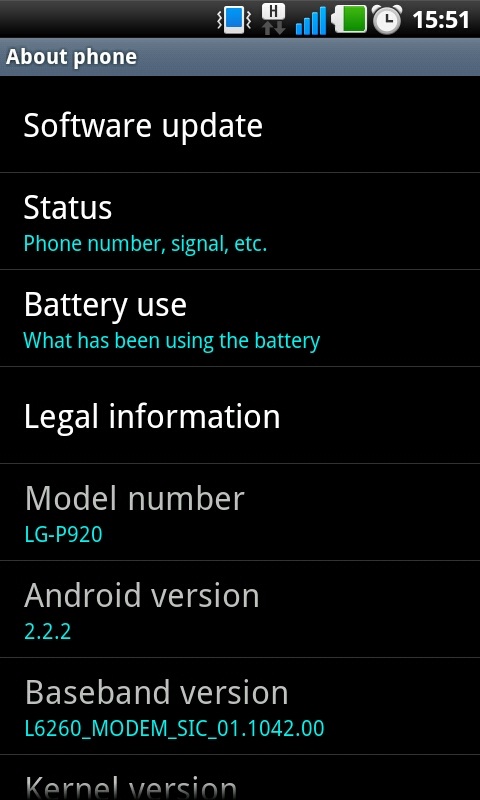

Step 3: Scroll down the Settings menu and tap ‘About Phone’ or ‘About Tablet’. This section of the menu shows which version of Android your device is running.

Step 4: The menu can vary slightly from device to device, but tap the ‘Software Update’ button or similar.

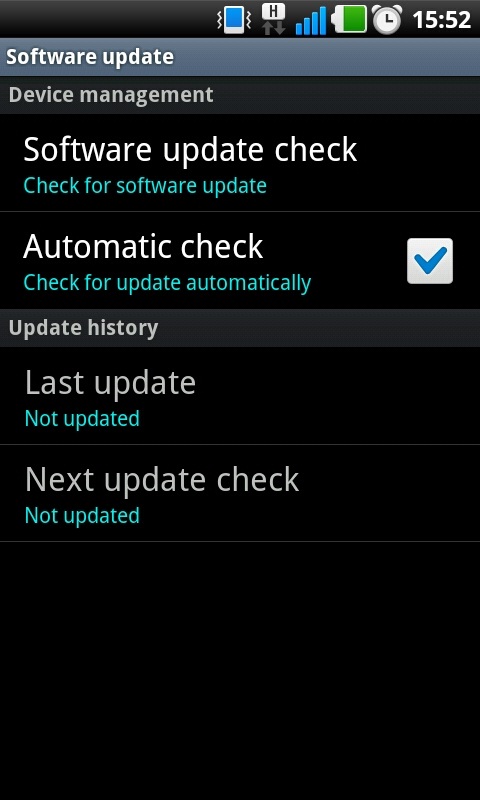

Step 5: Your phone or tablet will now search for an available update. If you are taken to another menu, select the ‘Software update check’ button or similar.

If an update is available for your device, you will be asked whether you wish to install it. If you select Yes, the system will download and install the new software and then reboot.

Note: Your device may require a Wi-Fi connection to search for an update. We also recommend downloading the software over Wi-Fi because the file size can be large.

How to Find the IP Address of an Android Smartphone?

You may need your Android phone’s IP address to set up a media center remote or a wireless file server; a number of phone and computer applications need your smartphone’s IP address.

There are two kinds of IP Addresses.

The internal IP address is the address assigned to your phone by the router it is connected to.

The external IP address is the address assigned by your ISP (Internet Service Provider).
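To make the internal/external distinction concrete, here is a small plain-Java sketch (the class and method names are hypothetical) that checks whether an IPv4 address falls in one of the private ranges home routers usually hand out. It relies on `InetAddress.isSiteLocalAddress()`, which for IPv4 returns true for 10.0.0.0/8, 172.16.0.0/12 and 192.168.0.0/16. Treating "private" as "internal" is an assumption that fits a typical home Wi-Fi network but not every network (carrier-grade NAT addresses, for example, are not flagged).

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

/** Sketch: tells a private (router-assigned) IPv4 address from a public one. */
public class IpKind {
    /** Expects a numeric IP literal; for literals, getByName parses without a DNS lookup. */
    static boolean isInternal(String ipLiteral) {
        try {
            InetAddress addr = InetAddress.getByName(ipLiteral);
            // True for the private ranges 10/8, 172.16/12 and 192.168/16.
            return addr.isSiteLocalAddress();
        } catch (UnknownHostException e) {
            throw new IllegalArgumentException("Not a valid IP literal: " + ipLiteral, e);
        }
    }

    public static void main(String[] args) {
        System.out.println(isInternal("192.168.1.23")); // prints true
        System.out.println(isInternal("8.8.8.8"));      // prints false
    }
}
```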


Finding your phone’s IP address may sound like a difficult job, but it isn’t. Below are some of the ways you can find your Android phone’s IP address.

1. From the phone

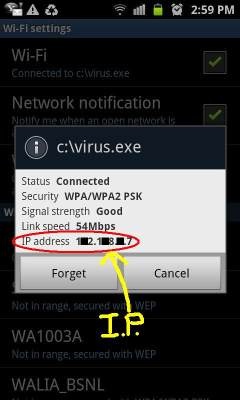

Go to Settings >> Wireless Controls >> Wi-Fi settings and tap the network you are connected to. A dialog pops up showing the network status, speed, signal strength, security type and IP address.
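An app can read the same internal address in code. The sketch below is a hypothetical plain-Java helper (`LocalIps` is our name) that uses only `java.net.NetworkInterface`, so it also runs on a desktop JVM. It lists the IPv4 addresses of every interface that is up and not loopback; on a phone connected to Wi-Fi this should typically include the address shown in the dialog above.

```java
import java.io.UncheckedIOException;
import java.net.Inet4Address;
import java.net.InetAddress;
import java.net.NetworkInterface;
import java.net.SocketException;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Enumeration;
import java.util.List;

/** Sketch: lists this device's non-loopback IPv4 addresses using java.net only. */
public class LocalIps {
    static List<String> localIpv4Addresses() {
        List<String> result = new ArrayList<>();
        try {
            Enumeration<NetworkInterface> nifs = NetworkInterface.getNetworkInterfaces();
            if (nifs == null) {
                return result; // guard against a null result just in case
            }
            for (NetworkInterface nif : Collections.list(nifs)) {
                if (!nif.isUp() || nif.isLoopback()) {
                    continue; // skip disabled interfaces and loopback (127.0.0.1)
                }
                for (InetAddress addr : Collections.list(nif.getInetAddresses())) {
                    if (addr instanceof Inet4Address) {
                        result.add(addr.getHostAddress()); // dotted-quad string
                    }
                }
            }
        } catch (SocketException e) {
            throw new UncheckedIOException(e);
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(localIpv4Addresses());
    }
}
```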

2. From the phone’s web browser

Finding your global IP address works the same way on all smartphone brands. Simply point your mobile phone’s web browser to . Alternative websites are CmyIP.com and touch.WhatsMyIP.org.

Below is the sample image of how it appears.

How to configure the Android emulator to simulate the Galaxy Nexus?

What Android emulator settings will simulate the characteristics of the Galaxy Nexus as closely as possible?

Solution:

Use the following settings to simulate the characteristics of the Galaxy Nexus:

- Target: Google APIs – API Level 15

- Skin: Built-in WXGA720

Selecting skin sets the following hardware parameters, leave them as-is:

- Hardware Back/Home: no

- Abstracted LCD density: 320

- Keyboard lid support: no

- Max VM application heap size: 48

- Device ram size: 1024
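For reference, the same hardware values can be written as the key=value lines an AVD keeps in its config.ini. This sketch is hypothetical (`NexusAvdConfig` and its methods are our names), and the key names (`skin.name`, `hw.mainKeys`, `hw.lcd.density`, `hw.keyboard.lid`, `vm.heapSize`, `hw.ramSize`) are our assumption of how the AVD Manager fields map to config.ini; compare them with the file your SDK version actually generates before relying on them.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical sketch: Galaxy Nexus AVD hardware values as config.ini lines. */
public class NexusAvdConfig {
    static Map<String, String> hardware() {
        Map<String, String> hw = new LinkedHashMap<>(); // keeps insertion order
        hw.put("skin.name", "WXGA720");   // Skin: Built-in WXGA720
        hw.put("hw.mainKeys", "no");      // Hardware Back/Home: no (assumed key)
        hw.put("hw.lcd.density", "320");  // Abstracted LCD density
        hw.put("hw.keyboard.lid", "no");  // Keyboard lid support
        hw.put("vm.heapSize", "48");      // Max VM application heap size (MB)
        hw.put("hw.ramSize", "1024");     // Device ram size (MB)
        return hw;
    }

    /** Renders properties as key=value lines, one per entry. */
    static String toIni(Map<String, String> props) {
        StringBuilder sb = new StringBuilder();
        for (Map.Entry<String, String> e : props.entrySet()) {
            sb.append(e.getKey()).append('=').append(e.getValue()).append('\n');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.print(toIni(hardware()));
    }
}
```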

The Galaxy Nexus has no SD card, only internal memory. The distinction between internal and external storage is important and can affect apps. To simulate this:

- add the SD Card support=no hardware parameter;

- launch the emulator with -partition-size 1024 for 1 GB of internal memory, or use some other means to increase the amount of internal memory available.
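When launching from a script or build tool, the emulator command can be assembled as an argument list. This is a hypothetical sketch: `EmulatorLaunch` is our name, `GalaxyNexus` is a placeholder for whatever you named the AVD, and it assumes the SDK's `emulator` binary is on your PATH. `-avd` selects the virtual device and `-partition-size` takes a size in megabytes.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch: builds the emulator command line with a larger internal partition. */
public class EmulatorLaunch {
    static List<String> command(String avdName, int partitionSizeMb) {
        List<String> cmd = new ArrayList<>();
        cmd.add("emulator");
        cmd.add("-avd");
        cmd.add(avdName);                            // the AVD created with the settings above
        cmd.add("-partition-size");
        cmd.add(Integer.toString(partitionSizeMb));  // in MB; 1024 gives about 1 GB
        return cmd;
    }

    public static void main(String[] args) {
        List<String> cmd = command("GalaxyNexus", 1024);
        System.out.println(String.join(" ", cmd)); // prints emulator -avd GalaxyNexus -partition-size 1024
        // To actually start it: new ProcessBuilder(cmd).inheritIO().start();
    }
}
```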

If you’re working on camera apps, you’ll also want to set the correct number of cameras and the correct resolution.
